38 mutual information Venn diagram

Mutual information is a measure of the inherent dependence expressed in the joint distribution of X and Y, relative to the joint distribution of X and Y under the assumption of independence. Mutual information therefore measures dependence in the following sense: I(X;Y) = 0 if and only if X and Y are independent random variables.
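The "if and only if" statement can be checked numerically for discrete variables. The sketch below is a minimal illustration, assuming the joint distribution is given as a small table of probabilities. It sums p(x,y) log2[p(x,y) / (p(x)p(y))], the standard discrete form of the comparison described above. The function name `mutual_information` and both example tables are our own choices, not taken from any quoted source.

```python
import math

def mutual_information(joint):
    """I(X;Y) in bits from a joint pmf given as a 2-D list.

    joint[i][j] is P(X = x_i, Y = y_j); the entries should sum to 1.
    """
    px = [sum(row) for row in joint]          # marginal of X (row sums)
    py = [sum(col) for col in zip(*joint)]    # marginal of Y (column sums)
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:                       # convention: 0 * log 0 = 0
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

# Independent: every cell equals P(X = x) * P(Y = y), with P(X) = (1/2, 1/2)
# and P(Y) = (1/4, 3/4).
independent = [[0.125, 0.375],
               [0.125, 0.375]]
# Fully dependent: Y is an exact copy of a fair bit X.
copy = [[0.5, 0.0],
        [0.0, 0.5]]

print(mutual_information(independent))  # 0.0
print(mutual_information(copy))         # 1.0
```

The probabilities were picked as powers of 1/2 so the independent case comes out as exactly 0.0 in floating point. With arbitrary decimals, expect a value within rounding error of zero instead.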

Mutual information: Venn diagram for various information measures associ... (World Heritage Encyclopedia, the aggregation of the largest online ...)


Harry places his information bits in locations 1-4 in the Venn diagram. [3] He then fills in the parity bits 5-7 so that each of the circles A, B, and C contains an even number of 1 bits. He can then send four bits of information to Sally, for example 0100 101, and she can fill in the bits on her own Venn diagram.

MUTUAL INFORMATION. On average, we require H(X) bits of information to specify one input symbol. ... An easy method of remembering the various relationships is given in Fig. 4.2. Although the diagram resembles a Venn diagram, it is not one; the diagram is only a tool for remembering the relationships.
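Harry's Venn-diagram scheme is the Hamming(7,4) code. The sketch below assumes a particular layout, because the text does not spell one out. Location 1 sits in A∩B, location 2 in A∩C, location 3 in B∩C, and location 4 in all three circles. Parity locations 5, 6, and 7 sit in the parts of A, B, and C that overlap no other circle. Under this hypothetical layout, the data 0100 produces the parity bits 101, which matches the example above. The names `CIRCLES`, `encode`, and `correct` are illustrative only.

```python
# Hypothetical layout (assumption): list indices 0..6 are locations 1..7.
# Each circle lists its data locations first and its own parity location last.
CIRCLES = {
    "A": (0, 1, 3, 4),   # locations 1, 2, 4 and parity location 5
    "B": (0, 2, 3, 5),   # locations 1, 3, 4 and parity location 6
    "C": (1, 2, 3, 6),   # locations 2, 3, 4 and parity location 7
}

def encode(data):
    """Place 4 data bits in locations 1-4, then set parity bits 5-7 so that
    every circle holds an even number of 1s."""
    bits = list(data) + [0, 0, 0]
    for members in CIRCLES.values():
        *data_locs, parity_loc = members
        bits[parity_loc] = sum(bits[i] for i in data_locs) % 2
    return bits

# Each of the 7 locations lies in a distinct, non-empty combination of circles.
REGION = {
    frozenset(c for c, members in CIRCLES.items() if loc in members): loc
    for loc in range(7)
}

def correct(word):
    """Fix at most one flipped bit: the set of circles with odd parity
    identifies the unique location that lies in exactly those circles."""
    bits = list(word)
    odd = frozenset(c for c, members in CIRCLES.items()
                    if sum(bits[i] for i in members) % 2 == 1)
    if odd:
        bits[REGION[odd]] ^= 1
    return bits

sent = encode([0, 1, 0, 0])
print("".join(map(str, sent)))     # 0100101
received = sent.copy()
received[2] ^= 1                   # flip location 3 in transit
print(correct(received) == sent)   # True
```

Under this layout, a single flipped bit changes the parity of exactly the circles containing its location. Sally can therefore locate the error by reading the diagram.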

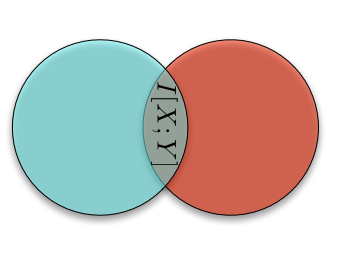

The Venn diagram below provides a simple interpretation of the factors and influences operating in Psychological Contracts. In the Psychological Contract Venn diagram, vc = visible contract: the usual written employment contractual obligations on both sides to work safely and appropriately in return for a rate of pay or salary, usually holidays also, plus other employee …

Venn diagram showing additive and subtractive relationships of various information measures associated with correlated variables ...

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as …

Importance-Driven Time-Varying Data Visualization, Chaoli Wang, Hongfeng Yu, Kwan-Liu Ma (University of California, Davis). Sections: Importance-Driven Volume Rendering; Differences; Questions; Related Work; Importance Analysis; Information Theory; Relations with Venn Diagram ...

An information diagram is a type of Venn diagram used in information theory to illustrate relationships among Shannon's basic measures of information: entropy, joint entropy, conditional entropy and mutual information. Information diagrams are a useful pedagogical tool for teaching and learning about these basic measures of information.
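For two discrete variables, the regions of the information diagram can be computed directly. The minimal sketch below obtains each region from entropies. It uses H(X|Y) = H(X,Y) − H(Y), H(Y|X) = H(X,Y) − H(X), and I(X;Y) = H(X) + H(Y) − H(X,Y), and the three regions together make up the joint entropy (the union of the two circles). The helper names `H` and `information_diagram` and the example table are ours, for illustration only.

```python
import math

def H(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def information_diagram(joint):
    """Regions of the two-circle information diagram for a joint pmf table."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    h_x, h_y = H(px), H(py)
    h_xy = H(p for row in joint for p in row)
    return {
        "H(X|Y)": h_xy - h_y,        # X circle minus the overlap
        "I(X;Y)": h_x + h_y - h_xy,  # overlap of the two circles
        "H(Y|X)": h_xy - h_x,        # Y circle minus the overlap
        "H(X,Y)": h_xy,              # union of both circles
    }

joint = [[0.25, 0.25],
         [0.00, 0.50]]
for name, value in information_diagram(joint).items():
    print(f"{name} = {value:.3f}")
# H(X|Y) = 0.689
# I(X;Y) = 0.311
# H(Y|X) = 0.500
# H(X,Y) = 1.500
```

The three regions add up to the union (0.689 + 0.311 + 0.500 = 1.500). The overlap is much smaller than the union, which is why joint entropy and mutual information should not be confused.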

We may use an information diagram, which is a variation of a Venn diagram, to represent the relationships between Shannon's information measures. This is similar to the use of the Venn diagram to represent relationships between probability measures. (Footnote 14: The name mutual information and the notation I(X;Y) were introduced by [Fano, 1961, Ch 2].)

Venn diagram representation of mutual information. (A) The pairwise mutual information \(I(G_1; G_2)\) corresponds to the intersection of the two circles and is always nonnegative. (B) The three-way mutual information \(I(G_1; G_2; G_3)\) corresponds to the intersection of the three circles, but it is not always nonnegative.

In the theory of probability and statistics, a Bernoulli trial (or binomial trial) is a random experiment with exactly two possible outcomes, "success" and "failure", in which the probability of success is the same every time the experiment is conducted. It is named after Jacob Bernoulli, a 17th-century Swiss mathematician, who analyzed them in his Ars Conjectandi (1713).
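Panel (B)'s warning can be checked with a standard example. The sketch below computes the centre region by inclusion-exclusion over joint entropies. It uses one common sign convention, under which the centre equals \(I(G_1;G_2) - I(G_1;G_2 \mid G_3)\); some authors flip the sign. The helper names and the two example distributions are our own choices for illustration.

```python
import math
from itertools import product

def entropy(joint, idx):
    """Entropy in bits of the marginal of `joint` on coordinates `idx`.

    `joint` maps outcome tuples to probabilities.
    """
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * math.log2(p) for p in marginal.values() if p > 0)

def three_way_mi(joint):
    """Centre region of the three-circle diagram, by inclusion-exclusion."""
    def h(*idx):
        return entropy(joint, idx)
    return (h(0) + h(1) + h(2)
            - h(0, 1) - h(0, 2) - h(1, 2)
            + h(0, 1, 2))

# G1, G2 independent fair bits and G3 = G1 XOR G2: negative centre.
xor = {(a, b, a ^ b): 0.25 for a, b in product((0, 1), repeat=2)}
# G1 = G2 = G3 = one shared fair bit: positive centre.
same = {(b, b, b): 0.5 for b in (0, 1)}

print(three_way_mi(xor))   # -1.0
print(three_way_mi(same))  # 1.0
```

In the XOR case, any two of the variables are independent, yet together they determine the third. The centre region must then be negative to keep the other regions consistent.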

If we refer back to our original Venn diagram graphic, we can pull these two algorithms together to predict the importance of the network feature in predicting the success or failure of the SLE metric. Mutual information, coupled with the Pearson correlation, is a building block for AI technologies.

The Venn diagram of mutual information: if the entropy H(X) is regarded as a measure of uncertainty about the random variable X, then the mutual information I(X;Y) measures how much the ...

As defined on Wikipedia, the Venn diagram shows additive and subtractive relationships among various information measures associated with variables X and Y. From this picture it is easy to see that I(X;Y) = H(X) + H(Y) − H(X,Y). When the number of variables increases, things get tough. For example, given:

An introduction to quantum Venn diagrams that you may like is given by Chris Adami. Here is an example of a quantum system Q measured by a classical measurement device A that consists of two pieces, A_1 and A_2. The shared information (central region) is always zero, which is what prevents cloning of a quantum state.
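For three variables, the same Venn-diagram bookkeeping extends by inclusion-exclusion. The identity below is standard and uses the convention in which the centre region is \(I(X;Y;Z) = I(X;Y) - I(X;Y \mid Z)\); some texts use the opposite sign. The second line follows from expanding each term with \(I(X;Y) = H(X) + H(Y) - H(X,Y)\) and \(I(X;Y \mid Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z)\). Unlike the two-variable overlap, this centre region can be negative.

```latex
\begin{aligned}
I(X;Y;Z) &= I(X;Y) - I(X;Y \mid Z) \\
         &= H(X) + H(Y) + H(Z) - H(X,Y) - H(X,Z) - H(Y,Z) + H(X,Y,Z).
\end{aligned}
```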

25.01.2022 · Our website showcases a Venn diagram depiction of digital marketing and what it entails. The three largest circles represent Social Media Marketing, Search Engine Marketing and Content Marketing ...

A Venn diagram is a diagram that shows all the possible logical relationships between a finite collection of sets or groups. It is also referred to as a set diagram or logic diagram. A Venn diagram uses multiple overlapping shapes (usually circles) to represent sets of various elements.
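With n sets, a Venn diagram has 2^n − 1 non-empty regions, one for each combination of sets that an element can belong to, plus the area outside all of them. The minimal Python sketch below enumerates those regions. The function name `venn_regions` and the example sets are illustrative only.

```python
from itertools import combinations

def venn_regions(sets):
    """Return one entry per region of the Venn diagram.

    Keys are tuples of set names; each value holds the elements that belong
    to exactly those sets and to none of the others.
    """
    names = list(sets)
    regions = {}
    for r in range(1, len(names) + 1):
        for combo in combinations(names, r):
            inside = set.intersection(*(sets[n] for n in combo))
            outside = set().union(*(sets[n] for n in names if n not in combo))
            regions[combo] = inside - outside
    return regions

example = {"A": {1, 2, 3}, "B": {2, 3, 4}, "C": {3, 5}}
for combo, members in venn_regions(example).items():
    print("".join(combo), sorted(members))
# A [1]
# B [4]
# C [5]
# AB [2]
# AC []
# BC []
# ABC [3]
```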

Entropy and Mutual Information. Erik G. Learned-Miller, Department of Computer Science, University of Massachusetts, Amherst, Amherst, MA 01003. September 16, 2013. Abstract: This document is an introduction to entropy and mutual information for discrete random variables. It gives their definitions in terms of probabilities, and a few simple examples.

As an information-theoretic concept, each atom is a mutual information of some of the variables conditioned on the remaining variables. Using information-theoretic concepts, for example, we can immediately read from the diagram that the joint entropy of all three variables \(H(A, B, C)\), that is, the union of all three, can be written as:
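The seven regions of the three-circle diagram give one standard way to complete this decomposition (the figure itself is not reproduced here). The centre term uses the convention \(I(A;B;C) = I(A;B) - I(A;B \mid C)\). Unlike the other six terms, it can be negative.

```latex
\begin{aligned}
H(A,B,C) ={}& H(A \mid B,C) + H(B \mid A,C) + H(C \mid A,B) \\
            &+ I(A;B \mid C) + I(A;C \mid B) + I(B;C \mid A) \\
            &+ I(A;B;C).
\end{aligned}
```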

Information measures: mutual information. 2.1 Divergence: main inequality. Theorem 2.1 (Information Inequality). \(D(P \| Q) \geq 0\); \(D(P \| Q) = 0\) iff \(P = Q\). ... The following Venn diagram illustrates the relationship between entropy, conditional entropy, joint entropy, and mutual information.
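The information inequality is easy to spot-check numerically. In the minimal sketch below, the function names, the example distributions, and the random test are ours, for illustration only. The last lines use the standard identity \(I(X;Y) = D(P_{XY} \| P_X P_Y)\) to connect the divergence to mutual information.

```python
import math
import random

def kl_divergence(p, q):
    """D(P||Q) in bits for two pmfs over the same finite alphabet."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue                  # 0 * log(0 / q) = 0 by convention
        if qi == 0:
            return math.inf           # P puts mass where Q has none
        total += pi * math.log2(pi / qi)
    return total

def random_pmf(rng, n):
    """Hypothetical helper: a random pmf on n outcomes."""
    weights = [rng.random() for _ in range(n)]
    s = sum(weights)
    return [w / s for w in weights]

print(kl_divergence([0.5, 0.5], [0.5, 0.5]))    # 0.0 when P = Q
print(kl_divergence([0.5, 0.5], [0.25, 0.75]))  # about 0.2075 bits

# Spot-check D(P||Q) >= 0 on random pairs (a test, not a proof).
rng = random.Random(0)
assert all(kl_divergence(random_pmf(rng, 5), random_pmf(rng, 5)) >= -1e-12
           for _ in range(1000))

# Mutual information as the divergence of the joint from the product of marginals.
joint = [0.5, 0.0, 0.0, 0.5]                  # flattened 2x2 table with Y = X
product_of_marginals = [0.25, 0.25, 0.25, 0.25]
print(kl_divergence(joint, product_of_marginals))  # 1.0
```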

For better understanding, the relationship between entropy and mutual information is depicted in the following Venn diagram, where the area shared by the two circles is the mutual information.

Properties of Mutual Information. The main properties of mutual information are the following:

- Non-negative: \(I(X; Y) \geq 0\)
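Non-negativity can be spot-checked on random joint tables, together with two other standard properties not listed in the excerpt. These are symmetry, \(I(X;Y) = I(Y;X)\), and the bound \(I(X;Y) \le \min(H(X), H(Y))\). The sketch below is a test harness, not a proof. The helper names and the random table generator are our own assumptions.

```python
import math
import random

def entropy(probs):
    """Shannon entropy in bits; zero probabilities contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) for a joint pmf table."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    h_xy = entropy(p for row in joint for p in row)
    return entropy(px) + entropy(py) - h_xy

def random_joint(rng, nx, ny):
    """Hypothetical helper: a random nx-by-ny joint pmf."""
    w = [[rng.random() for _ in range(ny)] for _ in range(nx)]
    total = sum(map(sum, w))
    return [[v / total for v in row] for row in w]

rng = random.Random(0)
for _ in range(1000):
    joint = random_joint(rng, rng.randint(1, 4), rng.randint(1, 4))
    transposed = [list(col) for col in zip(*joint)]
    mi = mutual_information(joint)
    assert mi >= -1e-12                                        # non-negative
    assert abs(mi - mutual_information(transposed)) < 1e-12    # symmetric
    h_x = entropy(map(sum, joint))                             # H(X)
    h_y = entropy(map(sum, transposed))                        # H(Y)
    assert mi <= min(h_x, h_y) + 1e-12                         # upper bound
print("all property checks passed")
```

The small tolerances absorb floating-point rounding; in exact arithmetic, the inequalities hold without them.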

It is based on a Venn diagram-type approach. There exist many expressions for this n-variable mutual information, all of which are the same classically but differ when conditional entropies are generalized to the quantum level. For a multiparticle system, one can make measurements on one particle or on more than one particle to probe the different ...

2.3 RELATIVE ENTROPY AND MUTUAL INFORMATION

The entropy of a random variable is a measure of the uncertainty of the random variable; it is a measure of the amount of information required on the average to describe the random variable. In this section we introduce two related concepts: relative entropy and mutual information.
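The phrase "amount of information required on the average to describe the random variable" can be made concrete with an optimal prefix code. The sketch below is our own illustration, and `huffman_lengths` is a hypothetical helper, not from the quoted text. It builds binary Huffman codeword lengths and compares the expected length with the entropy. The two coincide when every probability is a power of 1/2; otherwise, the expected length lies between H(X) and H(X) + 1 bits.

```python
import heapq
import math

def huffman_lengths(probs):
    """Codeword lengths of a binary Huffman code for the given pmf.
    (A one-symbol source gets length 0 in this sketch.)"""
    lengths = [0] * len(probs)
    # Heap entries: (probability, tie-breaker, symbols in this subtree).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        for s in s1 + s2:          # merging adds one bit to every symbol below
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, s1 + s2))
        counter += 1
    return lengths

def entropy(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

def expected_length(probs):
    return sum(p * l for p, l in zip(probs, huffman_lengths(probs)))

dyadic = [0.5, 0.25, 0.125, 0.125]
print(huffman_lengths(dyadic))                    # [1, 2, 3, 3]
print(expected_length(dyadic), entropy(dyadic))   # 1.75 1.75

skewed = [0.6, 0.4]
print(expected_length(skewed), entropy(skewed))   # 1.0 vs about 0.971
```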

Now, analogous to the Taylor diagram is our mutual information diagram, where a given variable is plotted radially, with radius equal to the square root of the entropy, and the angle with the X axis is given by the normalized mutual information.

We all know the usual Venn diagram for mutual information (source: Wikipedia). The visualization and description of joint entropy H(X,Y) make it appear as though it is the same as mutual information I(X;Y), which of course it is not.

Venn diagrams of (conditional) mutual information and interaction information. The analogy between entropies and sets should not be overinterpreted since the interaction information can also be ...

The entropy of a pair of random variables is commonly depicted using a Venn diagram. This representation is potentially misleading, however, since the multivariate mutual information can be negative. This paper presents new measures of multivariate information content that can be accurately depicted using Venn diagrams for any number of random variables.

Figure 3: The Venn diagram of some information theory concepts (entropy, conditional entropy, information gain). Taken from nature.com. (Tung.M.Phung, May 20, 2021 / June 25, 2021)

Definition. The mutual information between two continuous random variables X, Y with joint p.d.f. f(x,y) is given by

\[
I(X;Y) = \iint f(x,y) \log \frac{f(x,y)}{f(x)\,f(y)} \, dx \, dy. \qquad (26)
\]

For two variables it is possible to represent the different entropic quantities with an analogy to set theory. In Figure 4 we see the different quantities, and how the mutual ...
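Equation (26) can be sanity-checked on a case with a known answer. For a standard bivariate normal pair with correlation ρ, the mutual information is \(-\tfrac{1}{2}\ln(1-\rho^2)\) nats; this is a standard result, not derived in this excerpt. The sketch below approximates the double integral with a plain Riemann sum on a truncated square grid. The grid half-width and step size are our own choices, adequate for this smooth, rapidly decaying integrand. The natural logarithm gives the result in nats rather than bits.

```python
import math

def normal_pdf(t):
    """Standard normal density: the marginals f(x) and f(y)."""
    return math.exp(-t * t / 2) / math.sqrt(2 * math.pi)

def bivariate_normal_pdf(x, y, rho):
    """Joint density f(x, y) of a standard bivariate normal with correlation rho."""
    det = 1.0 - rho * rho
    q = (x * x - 2 * rho * x * y + y * y) / det
    return math.exp(-q / 2) / (2 * math.pi * math.sqrt(det))

def mi_numeric(rho, half_width=7.0, step=0.05):
    """Riemann-sum approximation of Eq. (26) over [-half_width, half_width]^2, in nats."""
    n = int(round(2 * half_width / step))
    grid = [-half_width + k * step for k in range(n + 1)]
    total = 0.0
    for x in grid:
        fx = normal_pdf(x)
        for y in grid:
            fxy = bivariate_normal_pdf(x, y, rho)
            if fxy > 0:                       # skip points that underflowed to 0
                total += fxy * math.log(fxy / (fx * normal_pdf(y)))
    return total * step * step

rho = 0.8
print(mi_numeric(rho))                  # numerical integral
print(-0.5 * math.log(1 - rho ** 2))    # closed form, about 0.5108 nats
```

Setting rho = 0 makes f(x, y) = f(x)f(y), so the integrand vanishes, and the sum returns a value within rounding error of zero.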

